Creating measures for complex services

Designing measures for complex services requires a different approach from the one used in logical service design. Here we look at how this works with systemic design, systems thinking and a complexity framework. Complex services are those that involve unpredictability, and they are often people-focused services. The public sector has a high proportion of complex services.

A complex service case study: Healthcare

Let's start by using a healthcare service as an example, a nice complex one! The current traditional measures are:

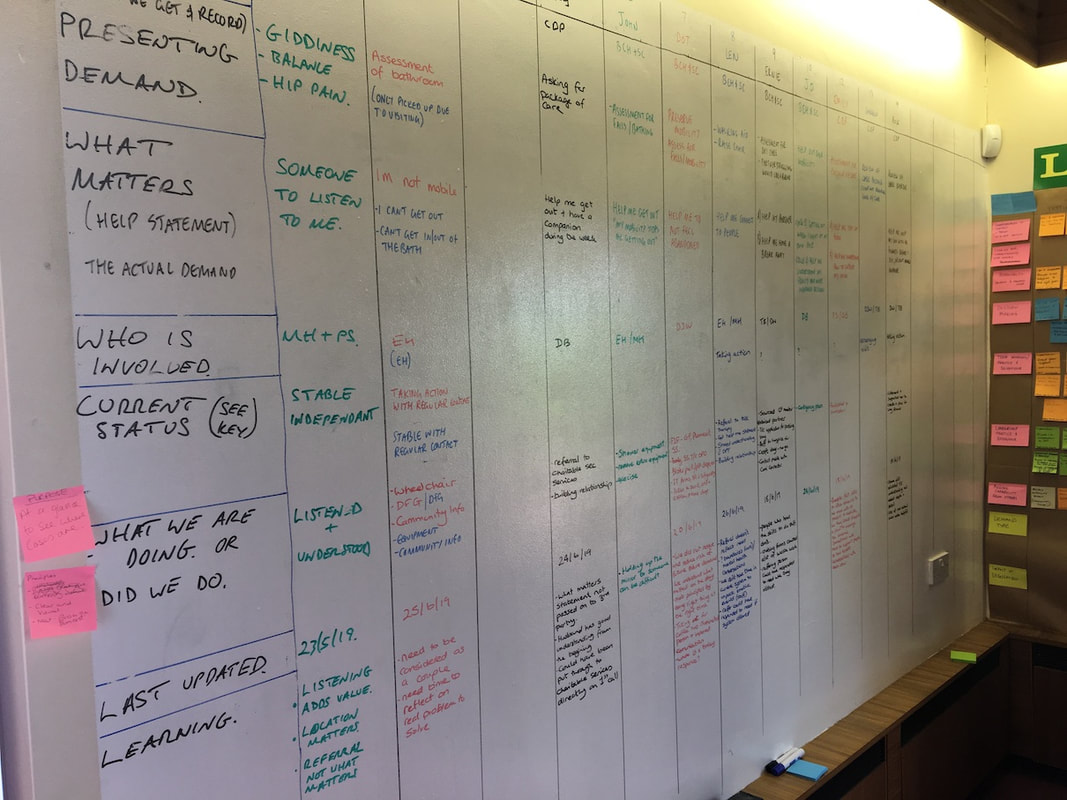

- number of assessments achieved within the target (2 weeks)

- resources spent vs budget

- number of complaints

- number of staff sick

- number of referrals to different departments

- number of cases per worker per day

- time taken to close a referral

In our initial analysis of how the current service worked, we realised that the measures were created by managers with a particular paradigm:

- that all cases should be treated as the same, therefore we can compare each case to each other. We create average trends.

- we create standard ways to measure, that treat every demand as equal.

- we standardise demands into the service: we have a set of predefined problems and we fit all demands into that list.

- we measure time spent doing anything, and attempt to reduce it, regardless of its value.

- we set arbitrary targets.

- we challenge staff when they want to spend what managers think is too much.

In our systemic Prototype Design, we wanted to use measures to help senior leaders develop systemic understanding, and to demonstrate how well the new design prototype was working compared to the current way of working. We also wanted the focus to be a person-centred view of the service. The old measures failed to do this, so we had to create a new set. In the prototype, as we took on each case, we recorded as much information as we thought we might need, and made it very visible.

I worked with the team and the team manager to decide what the new measures were going to be, and this took time. What to measure was decided as a team, and in the subsequent weeks the measures were tweaked as we learned more. One team member showed a flair for this, so they became the one who focused on it more than the others.

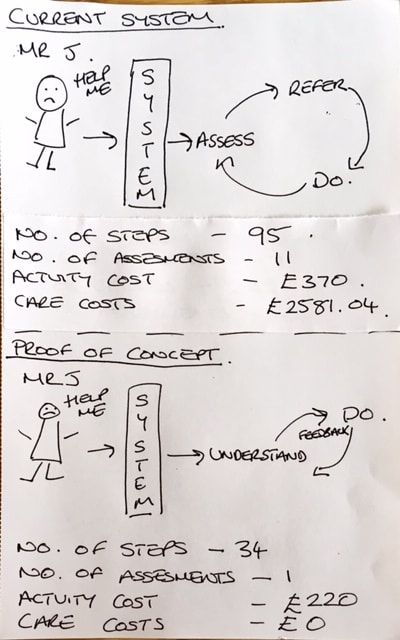

First we developed concepts of the old vs new ways of working. Below is one comparison of the flow between the old and the new. We used this simple approach to start creating new measures based on a new set of principles. The team had never created measures before, so this had to start from first principles.

Deciding what to measure

To develop a new approach to measures, we needed something that took us away from what we had done before. So we started with four main areas: service and purpose, efficiency, revenue, and morale (culture). These are the areas that measures should cover in any service.

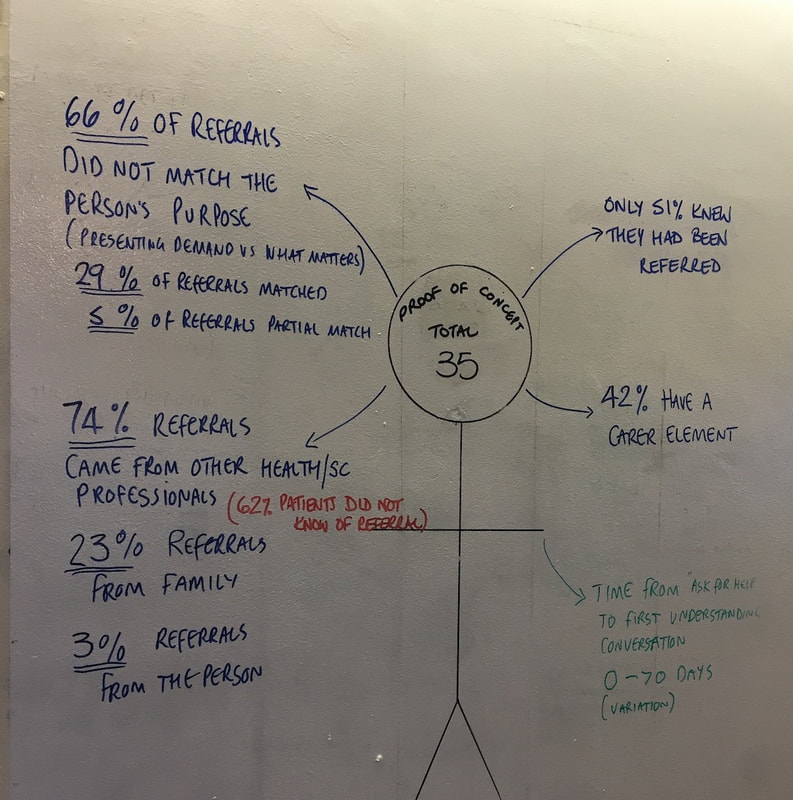

With a complex, high-variety service like this, comparing the measurements of one case with those of another tells us nothing reliable, because the cases differ so much. Yet this is exactly what the current method of measurement was doing, and we found that it caused all sorts of bizarre and inappropriate behaviours and decisions by staff and managers. However, when we take a random sample of cases from the old way of working and compare it with a sample from the new way of working, the difference can point to interesting characteristics of the prototype way of working.
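The sample comparison described above could be sketched roughly as follows. This is only an illustration, not the tooling the team used: the function name `sample_median`, the choice of the median as the summary, and all of the figures are assumptions made up for the example.

```python
import random
from statistics import median


def sample_median(values, sample_size, seed=None):
    """Median of one measure over a random sample of cases."""
    rng = random.Random(seed)
    return median(rng.sample(values, sample_size))


# Invented figures for illustration only: repeat demands per case.
old_way = [4, 6, 3, 7, 5, 8, 4, 6]
new_way = [1, 0, 2, 1, 0, 1, 2, 1]

old_median = sample_median(old_way, sample_size=5, seed=1)
new_median = sample_median(new_way, sample_size=5, seed=1)

# The two samples are compared as groups, never case against case.
difference = old_median - new_median
print(f"old: {old_median}, new: {new_median}, difference: {difference}")
```

The point of the sketch is the shape of the comparison: a group summary of the old way against a group summary of the new way, used as a prompt for discussion rather than as a target.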

PURPOSE

- Purpose was defined as:

the what: 'help me to live a good life.'

the how: 'by listening to me, and helping me, and using what I have to help me do what I cannot do.'

- we recorded the number of assessments as an indication of a poor approach to understanding need.

We measured purpose by the person's ability to live without unnecessary support from us, and by any follow-up repeat demands.

- number of repeat demands: we recorded every time the citizen contacted a health professional anywhere in the system.

- feedback from visits: we recorded their trust and the depth of their relationship with us.

- number of people connected to the citizen: a high number indicates that the relationship is being split up across many different connections.

- matching the referral to the real needs of the citizen: how well did we originally assess their need, compared to what we eventually learned?

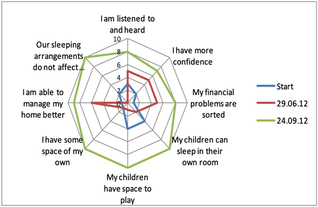

WHAT MATTERS

This was different for each person, so we asked them what it was. We wrote it down and went back to it now and again, as it changed over time. What was important is that it guided us on how to proceed and what to focus on to help them achieve a good life.

The information contained here is in flux; it changes over time. So the question is: 'how appropriate is it for this information to be standardised and digitalised?'

VALUE

This became evident as we worked with each person. Value is the set of activities agreed between the person and ourselves, those actions that directly helped them: talking and listening, guiding, making things happen, organising activities, building knowledge. We looked to their family environment, friends, community, and health.

What was NOT value was easier to see: writing records and reports, asking for permission, applying for funds, creating plans.

Control was directed by the person, as opposed to being derived from the organisation.

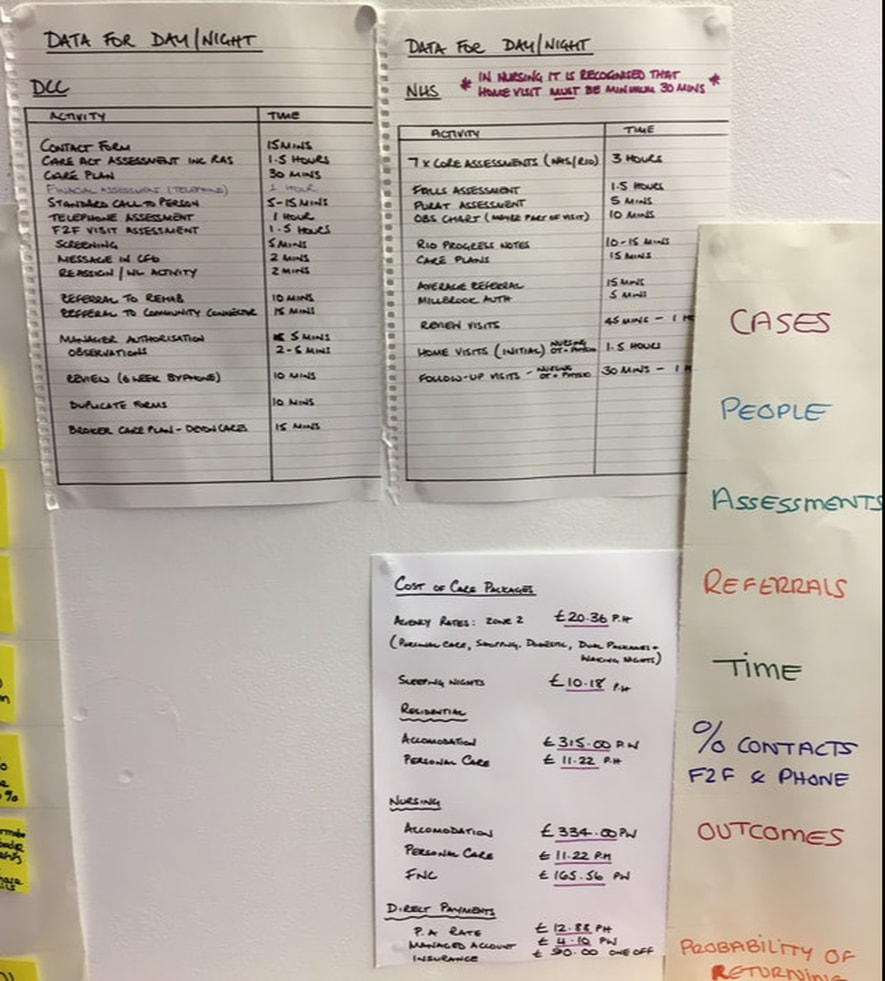

EFFICIENCY

The health service is not a transactional and standard service. Therefore efficiency in the traditional sense was not appropriate. We had to use what we could measure to indicate efficiency.

- the number of handoffs and referrals, as these indicate waste through duplication.

- any non-direct contact was recorded as non-value.

- repeat demand.
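As a rough sketch of how these three indicators might be pulled from a single case log: the names `Activity`, `CaseLog` and `efficiency_indicators`, the idea of logging minutes per activity, and the example figures are all assumptions for illustration, not the team's actual record format.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Activity:
    description: str
    minutes: int
    direct_contact: bool  # True when the time was spent directly with the person


@dataclass
class CaseLog:
    handoffs: int = 0
    referrals: int = 0
    repeat_demands: int = 0
    activities: List[Activity] = field(default_factory=list)


def efficiency_indicators(case: CaseLog) -> dict:
    """Summarise the three efficiency indicators for one case."""
    total = sum(a.minutes for a in case.activities)
    # Any time not spent in direct contact is counted as non-value.
    non_value = sum(a.minutes for a in case.activities if not a.direct_contact)
    return {
        "handoffs_and_referrals": case.handoffs + case.referrals,
        "non_value_share": non_value / total if total else 0.0,
        "repeat_demands": case.repeat_demands,
    }


# Invented example case, for illustration only.
case = CaseLog(
    handoffs=2,
    referrals=1,
    repeat_demands=1,
    activities=[
        Activity("home visit", 60, True),
        Activity("writing records", 30, False),
        Activity("applying for funds", 30, False),
    ],
)
indicators = efficiency_indicators(case)
print(indicators)
```

In this made-up case, half of the logged time is non-value, which is the kind of figure that starts a conversation rather than ends one.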

REVENUE (SPEND)

- real total time spent on a case. This indicated rough total cost, rather than actual cost.

- repeat demand, indicating that the cost calculation needed to continue with the new demand.
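A minimal sketch of this rough cost calculation, assuming time is logged in minutes per episode of demand and converted with a single hourly rate. The rate, the function name `rough_case_cost` and the figures are hypothetical; the source only says that total time indicated rough total cost.

```python
ASSUMED_HOURLY_RATE = 30.0  # hypothetical rate, not taken from the case study


def rough_case_cost(episode_minutes, hourly_rate=ASSUMED_HOURLY_RATE):
    """Rough total cost of a case from the time spent on it.

    episode_minutes holds the total minutes for the original demand,
    followed by the minutes for each repeat demand, so the cost keeps
    accumulating when the person comes back.
    """
    total_hours = sum(episode_minutes) / 60
    return total_hours * hourly_rate


# Invented example: original demand, then two repeat demands.
cost = rough_case_cost([240, 90, 30])
print(cost)
```

Keeping repeat demands in the same list as the original demand is the design choice that matters here: a case that is closed quickly but returns twice is costed as one case, not three cheap ones.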

A really important element at this point was the team helping senior leaders to reframe their understanding of cost. The team showed how focusing on cost actually increased overall cost, and that what they were doing instead was understanding the causes of cost and working on those to reduce it.

MORALE

- Morale was described by the team directly in feedback to managers and leaders. It was evident in their dialogue, behaviour, and attitude.

Collecting the information

Each of the prototype cases was rigorously analysed with those working on it.

We created new measures, based on the purpose of what we were doing, to prove the effectiveness of the new prototype and to demonstrate to leaders the difference between the old and new ways of working. These measures were going to be the basis for deciding whether we continued or not. We were looking for meaningful information, rather than data that is easy to record.

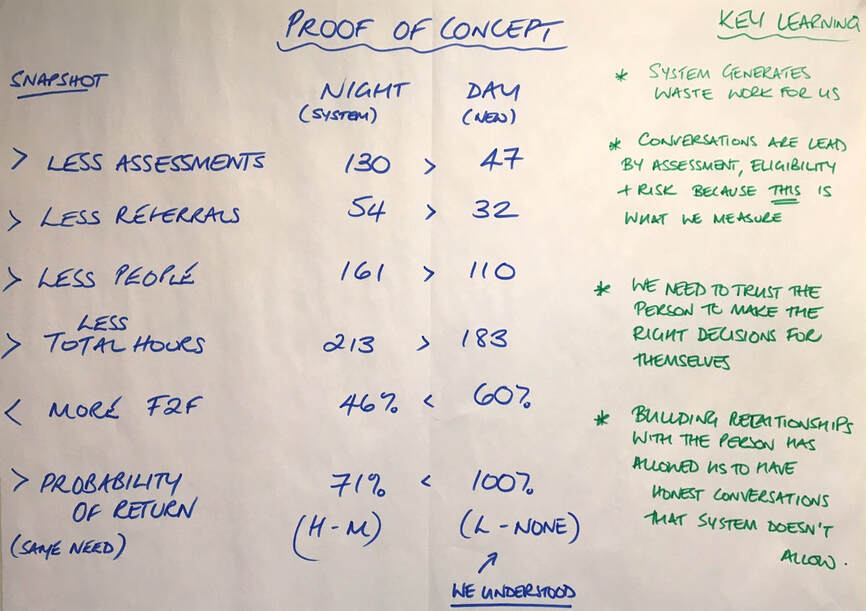

And finally, the summary that the team discussed with the leaders (night = current way of working, day = new way of working).

How we used the measures

Why have everything on the wall? The impact of putting it on the wall was really profound and helpful. The team had the measures in the room with them at all times. The measures became theirs. The interpretation of the measures developed over time, and became part of team discussions and reviews.

Those leading the methodology in the team used the measures to bring staff together to collaborate towards a common purpose. But how this was achieved was a collaborative effort decided by the whole team. For a group that was used to top-down decision-making, this new approach, led by Roxanne Tandridge, was a catalyst for developing a new way of team working.

At this stage in the design, perhaps the most important point to note is that the feedback on the prototype goes to the decision makers. It is not a presentation; it is feedback to a group who have already been engaged with the team and the design.

The purpose of this measures aspect of the design was to connect leaders with what was important to the user, the business, and the leaders themselves. At this point it may appear that we have reduced everything to numbers, but we have not. We use these numbers to describe the concepts, and that's why we do this face to face.

When the team went through this, the leaders basically fell off their chairs! The measures were collated by front-line staff and discussed with the most senior people in the organisation. Job done!
